Korean Researchers Develop AI Semiconductor to Accelerate the Large Language Models Behind ChatGPT

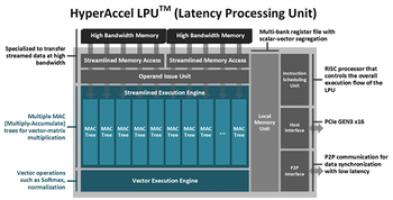

▲ Overview of the LPU structure developed by the research team (provided by KAIST) © Patent News

ChatGPT, launched by OpenAI, has become a global sensation, drawing attention from individuals and industries alike. Unlike earlier artificial intelligence systems, it is built on a large language model of unprecedented scale. Running such a model is expensive, however: it requires a large number of high-performance GPUs, which drives computing costs to astronomical levels.

On August 4, KAIST announced that Professor Joo-Young Kim’s research team in the Department of Electrical and Electronic Engineering has developed an AI semiconductor, the ‘LPU (Latency Processing Unit),’ that significantly accelerates inference for large language models, the core technology behind ChatGPT.

The LPU is an AI semiconductor whose computation engine maximizes memory bandwidth utilization and performs all the computations needed for inference at high speed. It can also be scaled out easily to multiple accelerators through its own networking. An accelerator server built on the LPU delivers up to 50% higher performance and 2.4 times better price-performance than a supercomputer based on NVIDIA’s A100, the industry’s leading high-performance GPU. The team expects it to be able to replace high-performance GPUs in data centers, where demand for generative AI services is surging.
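
Why memory bandwidth matters for inference can be illustrated with a rough estimate. The sketch below is a back-of-the-envelope model, not the LPU’s actual design: it assumes that generating each token at batch size 1 requires reading every model weight from memory once, so throughput is bounded by effective bandwidth divided by model size. The function name `decode_tokens_per_second` and every number in the example (a hypothetical 1-billion-parameter model with 16-bit weights on hardware with 1 TB/s of memory bandwidth) are illustrative assumptions, not figures reported by KAIST.

```python
"""Back-of-the-envelope model of memory-bound LLM token generation.

Illustrative assumption only: this is not the LPU's published design.
"""


def decode_tokens_per_second(num_params: float,
                             bytes_per_param: float,
                             peak_bandwidth_bytes_per_s: float,
                             bandwidth_utilization: float) -> float:
    """Upper bound on tokens/s for batch-size-1 decoding.

    Assumes each generated token streams all weights from memory once and
    ignores KV-cache traffic, compute limits and communication overhead.
    """
    if not 0.0 < bandwidth_utilization <= 1.0:
        raise ValueError("bandwidth_utilization must be in (0, 1]")
    # Bytes that must be read from memory to produce one token.
    bytes_per_token = num_params * bytes_per_param
    # Fraction of peak bandwidth the compute engine actually keeps busy.
    effective_bandwidth = peak_bandwidth_bytes_per_s * bandwidth_utilization
    return effective_bandwidth / bytes_per_token


if __name__ == "__main__":
    # Hypothetical example: 1B-parameter model, 2-byte weights, 1 TB/s memory.
    for utilization in (0.3, 0.9):
        rate = decode_tokens_per_second(1e9, 2, 1e12, utilization)
        print(f"utilization {utilization:.0%}: ~{rate:.0f} tokens/s")
```

Under these assumptions, raising bandwidth utilization from 30% to 90% triples the token rate on the same memory system, which is why an engine designed to keep memory bandwidth busy matters for large language model inference.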

The research was carried out by HyperAccel, a startup founded by Professor Kim Joo-young, and received the Best Presentation in Engineering Award at the Design Automation Conference (DAC), held in San Francisco on July 12 (US time).

DAC is a leading international conference on semiconductor design that showcases state-of-the-art work, particularly in Electronic Design Automation (EDA) and semiconductor intellectual property (IP). Major companies such as Intel, Nvidia, AMD, Google, Microsoft, Samsung, and TSMC participate in DAC, as do leading universities including Harvard, MIT, and Stanford.

Professor Kim’s team was the only award recipient among world-class semiconductor technologies whose work was an AI semiconductor for large language models, which underscores the significance of the result. The award recognized the LPU as a solution that can substantially reduce the enormous cost of deploying large language models.

Expressing his grand ambitions, Professor Kim Joo-young of KAIST stated, “With the new ‘LPU’ processor dedicated to massive artificial intelligence calculations, we aim to spearhead the global market and surpass the technological prowess of big tech companies.”


ChatGPT, launched by OpenAI, is a hot topic around the world, and everyone is paying attention to the changes this technology brings. It is based on a large language model, an AI model of unprecedented scale compared with existing artificial intelligence. Implementing it requires a large number of high-performance GPUs, leading to astronomical computing costs.

KAIST announced on the 4th that Professor Joo-Young Kim’s research team in the Department of Electrical and Electronic Engineering has developed an AI semiconductor that efficiently accelerates inference for the large language model at the core of ChatGPT.

The research team’s AI semiconductor, the ‘LPU (Latency Processing Unit)’, efficiently accelerates inference for large language models. Its computation engine maximizes memory bandwidth utilization and performs all the computations needed for inference at high speed, and the chip can easily be scaled out to multiple accelerators through its own networking. An accelerator server based on the LPU delivers up to 50% higher performance and 2.4 times better price-performance than a supercomputer built on NVIDIA’s A100, the industry’s leading high-performance GPU. It is expected to be able to replace high-performance GPUs in data centers, where demand for generative AI services is growing rapidly.
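
The two headline figures can be related with simple arithmetic. Assuming, as a simplification, that price-performance means performance divided by system cost and that both figures describe the same server comparison, the LPU server’s implied cost relative to the A100 system is the performance ratio divided by the price-performance ratio. The article states neither assumption, so the result below is an interpretation rather than a reported number, and the function name `implied_cost_ratio` is hypothetical.

```python
def implied_cost_ratio(performance_ratio: float,
                       price_performance_ratio: float) -> float:
    """Cost of system B relative to system A, given B's speedup over A
    and B's price-performance gain over A.

    Assumes price-performance = performance / cost for both systems, so
    cost_B / cost_A = (perf_B / perf_A) / (pp_B / pp_A).
    """
    if performance_ratio <= 0 or price_performance_ratio <= 0:
        raise ValueError("ratios must be positive")
    return performance_ratio / price_performance_ratio


if __name__ == "__main__":
    # "Up to 50% higher performance" -> ratio 1.5; "2.4 times" price-performance.
    ratio = implied_cost_ratio(1.5, 2.4)
    print(f"implied LPU server cost: {ratio:.3f} x the A100 system")
```

Under these assumptions the LPU server would cost roughly 62.5% of the GPU system. Because the 50% figure is a best case (“up to”), the actual ratio for a given workload could differ.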

This research was carried out by HyperAccel, a company newly founded by Professor Kim Joo-young, and won the Best Presentation in Engineering Award at the Design Automation Conference (DAC), held in San Francisco on July 12 (US time).

DAC is a leading international conference in semiconductor design that showcases state-of-the-art semiconductor design technologies, particularly in Electronic Design Automation (EDA) and semiconductor intellectual property (IP). World-renowned semiconductor design companies such as Intel, Nvidia, AMD, Google, Microsoft, Samsung, and TSMC participate in DAC, and many of the world’s top universities, including Harvard University, MIT, and Stanford University, also take part.

Among world-class semiconductor technologies, it is notable that Professor Kim’s team was the only one to receive the award for an AI semiconductor targeting large language models. The award recognized the LPU on the world stage as an AI semiconductor solution that can significantly reduce the enormous cost of deploying large language models.

KAIST Professor Kim Joo-young expressed his great ambition, saying, “With the new ‘LPU’ processor for massive artificial intelligence computation, we will pioneer the global market and move ahead of the technological power of big tech companies.”
