KAIST Develops Core AI Semiconductor for ChatGPT, 2.4 Times More Cost-Effective

As the world focuses on the advancements of ChatGPT technology, KAIST has introduced a core AI semiconductor for ChatGPT-class models that is 2.4 times more cost-effective.

Revolutionary AI Semiconductor to Accelerate Computation for ChatGPT

Leading a team of researchers, Professor Joo-Young Kim from KAIST’s Department of Electrical and Electronic Engineering recently announced the development of an AI semiconductor specifically engineered to enhance the computation speed of the large language model utilized as ChatGPT’s core.

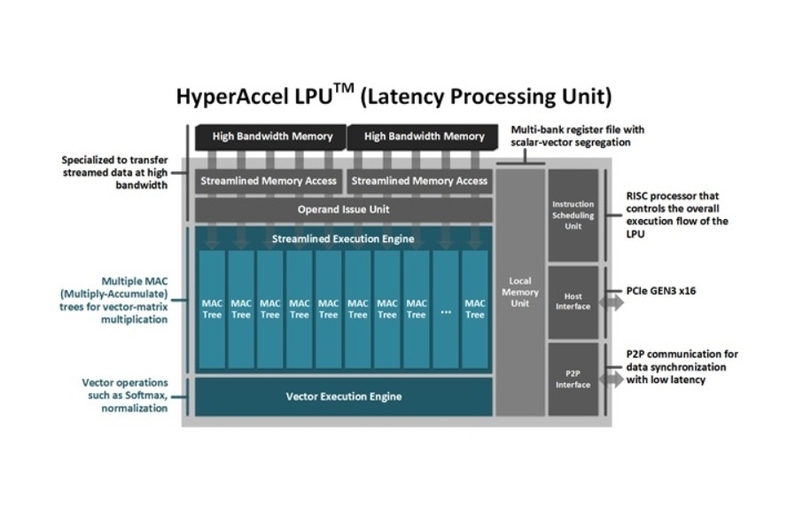

The AI semiconductor, known as the ‘LPU (Latency Processing Unit)’, accelerates large language model inference. It features a compute engine that maximizes memory bandwidth utilization and performs all the calculations required for inference at high speed, and it scales easily to multiple accelerators through its own networking.

Impressive Performance and Cost Efficiency

An acceleration server built on the LPU delivers up to 50% higher performance and 2.4 times better price-performance than a supercomputer based on the NVIDIA A100, the industry’s leading high-performance GPU. It is therefore expected to be able to replace high-performance GPUs in data centers, where demand for generative AI services is growing rapidly.
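To see how the two headline figures relate, here is a minimal arithmetic sketch. It assumes that price-performance is defined as performance divided by price and that both figures come from the same comparison against the A100-based system; the article states neither, and "up to 50%" is a peak figure, so the result is illustrative only. The function and variable names are hypothetical.

```python
def implied_cost_ratio(perf_ratio: float, price_perf_ratio: float) -> float:
    """Relative price implied by a performance ratio and a price-performance ratio.

    Assumes price-performance = performance / price, so
    price_perf_ratio = perf_ratio / cost_ratio.
    """
    return perf_ratio / price_perf_ratio


# Figures quoted in the article, relative to the A100-based system.
perf_ratio = 1.5        # "up to 50%" higher performance (best case)
price_perf_ratio = 2.4  # 2.4 times better price-performance

cost_ratio = implied_cost_ratio(perf_ratio, price_perf_ratio)
print(f"Implied relative price: {cost_ratio:.3f}")
```

Under these assumptions the LPU-based server would cost roughly five-eighths of the A100-based system while running up to 1.5 times faster. The article itself gives no prices.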

Award-Winning Innovation

The research, carried out by Professor Joo-Young Kim’s startup, HyperAccel, won the best presentation award in the engineering category at the International Design Automation Conference (DAC), held in San Francisco on July 12. DAC is a leading venue for cutting-edge semiconductor design technology, including electronic design automation (EDA) and semiconductor intellectual property (IP). Notable participants include Intel, Nvidia, AMD, Google, Microsoft, Samsung, and TSMC, as well as top universities such as Harvard, MIT, and Stanford.

Recognition in Semiconductor Technology for Large Language Models

Among the world-class semiconductor technologies presented, Professor Kim’s team was the only one to receive an award for AI semiconductor technology for large language models. The award recognizes the LPU on the global stage as an AI semiconductor solution that can significantly reduce the substantial cost of deploying large language models.

Pioneering the Future of AI Calculations

Expressing his ambition, Professor Joo-Young Kim of KAIST stated, “With our new ‘LPU’ processor, we aim to pioneer the global market for future large-scale artificial intelligence computation and get ahead of the technology of big tech companies.”

Reporter Geun-Nan Lim, Hellotti News

While the world is paying attention to the changes that ChatGPT technology brings, KAIST has developed a core AI semiconductor for ChatGPT that is 2.4 times more cost-effective.

A research team led by Professor Joo-Young Kim from KAIST’s Department of Electrical and Electronic Engineering announced on the 4th that it has developed an AI semiconductor that efficiently accelerates inference for the large language model at the core of ChatGPT.

The ‘LPU (Latency Processing Unit)’ developed by Professor Kim’s research team efficiently accelerates large language model inference. It has a compute engine that maximizes the use of memory bandwidth and performs all the calculations required for inference at high speed, and it is easily expandable to multiple accelerators through its own networking.

This LPU-based acceleration server increases performance by up to 50% and price-performance by 2.4 times compared with a supercomputer based on the NVIDIA A100, the industry’s leading high-performance GPU. It is expected to be able to replace high-performance GPUs in data centers, where demand for generative AI services is increasing rapidly.

This research was carried out by HyperAccel, Professor Joo-Young Kim’s newly founded company, and won the best presentation award in the engineering category at the International Design Automation Conference (DAC), held in San Francisco on July 12, US time.

DAC is a leading international conference in semiconductor design that showcases state-of-the-art design technology, particularly electronic design automation (EDA) and semiconductor intellectual property (IP). World-renowned companies such as Intel, Nvidia, AMD, Google, Microsoft, Samsung, and TSMC participate in DAC, and many of the world’s top universities, such as Harvard University, MIT, and Stanford University, also take part.

It is especially meaningful that, among world-class semiconductor technologies, Professor Kim’s team was the only one to receive an award for AI semiconductor technology for large language models. With this award, the LPU was recognized on the world stage as an AI semiconductor solution that can significantly reduce the huge cost of running large language models.

Professor Joo-Young Kim of KAIST expressed his aspirations by saying, “We will pioneer the global market with the new ‘LPU’ processor for future large-scale artificial intelligence computation and get ahead of the technology of big tech companies.”

