KAIST Research Team Successfully Develops AI Semiconductor for Enhanced Computation of Large Language Model (LLM) Inference

The Korea Advanced Institute of Science and Technology (KAIST) has announced an AI semiconductor that significantly speeds up large language model (LLM) inference. The chip was developed by a research team led by Professor Joo-Young Kim of the Department of Electrical and Electronic Engineering.

The chip, called the Latency Processing Unit (LPU), is an AI semiconductor designed specifically to accelerate LLM inference. Its compute engine maximizes memory bandwidth utilization and performs all the calculations that inference requires at high speed. Built-in networking also lets it scale easily to multiple accelerators.
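To see why memory bandwidth utilization matters for LLM inference, consider a rough back-of-the-envelope sketch. It assumes single-stream token generation in which every model weight is read from memory once per generated token, a common simplification and not a description of the LPU's actual design. The function name and all numbers below (a 7-billion-parameter model, 2 bytes per parameter, 1 TB/s of memory bandwidth) are illustrative assumptions, not figures from KAIST.

```python
def max_decode_tokens_per_second(param_count, bytes_per_param,
                                 bandwidth_bytes_per_s, utilization):
    """Upper bound on tokens/s when reading the weights dominates each step.

    Hypothetical helper: assumes all weights are streamed from memory once
    per generated token and ignores compute time, caches, and batching.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    weight_bytes = param_count * bytes_per_param
    return bandwidth_bytes_per_s * utilization / weight_bytes


# Illustrative values only (assumptions, not measurements).
PARAMS = 7e9        # 7-billion-parameter model
BYTES = 2           # 16-bit weights
BANDWIDTH = 1e12    # 1 TB/s of memory bandwidth

low = max_decode_tokens_per_second(PARAMS, BYTES, BANDWIDTH, utilization=0.5)
high = max_decode_tokens_per_second(PARAMS, BYTES, BANDWIDTH, utilization=0.9)

print(f"50% utilization: {low:.1f} tokens/s")
print(f"90% utilization: {high:.1f} tokens/s")
```

Under these assumptions the achievable token rate scales linearly with the fraction of memory bandwidth that is actually used, which is why an engine built around bandwidth utilization can pay off for this workload.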

According to KAIST, an LPU-based accelerator server delivers up to 50% higher performance and 2.4 times better price/performance than a supercomputer built on Nvidia's A100 high-performance GPU. KAIST expects the chip could replace high-performance GPUs in data centers, where demand for AI services is rising rapidly.
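Price/performance can be read as performance divided by cost, each measured relative to the A100 baseline. The short sketch below is arithmetic only, and it assumes that the 50% and 2.4 times figures refer to the same system configuration, which the announcement does not confirm. The function names are hypothetical.

```python
def price_performance_gain(speedup, relative_cost):
    """Price/performance relative to a baseline whose speed and cost are 1.0."""
    if speedup <= 0 or relative_cost <= 0:
        raise ValueError("speedup and relative_cost must be positive")
    return speedup / relative_cost


def implied_relative_cost(speedup, price_perf_gain):
    """Cost (as a fraction of the baseline) implied by the two reported gains."""
    if speedup <= 0 or price_perf_gain <= 0:
        raise ValueError("speedup and price_perf_gain must be positive")
    return speedup / price_perf_gain


# Reported upper-bound figures, treated here as if they describe one system.
SPEEDUP = 1.5            # "up to 50%" higher performance
PRICE_PERF_GAIN = 2.4    # 2.4 times better price/performance

cost = implied_relative_cost(SPEEDUP, PRICE_PERF_GAIN)
print(f"Implied cost relative to baseline: {cost:.3f}")
```

Read this way, most of the price/performance advantage would come from lower cost and not from raw speed, but that conclusion holds only if the stated assumption is true.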

The research was carried out by HyperAccel, a company founded by Professor Joo-Young Kim, and has been recognized internationally. The team won the Best Presentation Award in the engineering category at the International Semiconductor Design Automation Conference (DAC), held in San Francisco, USA. DAC showcases cutting-edge semiconductor design technologies, including electronic design automation (EDA) and semiconductor intellectual property (IP). Participants include leading semiconductor design companies such as Intel, Nvidia, AMD, Google, Microsoft, Samsung, and TSMC, as well as universities such as Harvard University, MIT, and Stanford University.

KAIST stressed the significance of Professor Kim's team being the only one to win an award at DAC for AI semiconductor technology for LLMs. With the award, the team's solution has been recognized internationally as a way to substantially reduce the cost of LLM inference.

Professor Joo-Young Kim said, “We aim to lead the global market with our new LPU processor, designed for high-performance artificial intelligence calculations, and surpass the technological prowess of major tech companies.”

The result marks a notable step for AI hardware, with potential implications for machine learning and natural language processing workloads across industries.

Jang Se-min, Reporter

semim99@aitimes.com

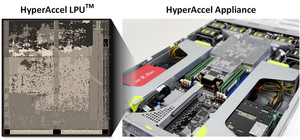

LPU chips and accelerator developed by the research team (Photo = KAIST)

The Korea Advanced Institute of Science and Technology (KAIST, President Lee Kwang-hyung) announced on the 4th that a research team led by Professor Joo-Young Kim of the Department of Electrical and Electronic Engineering has developed an artificial intelligence (AI) semiconductor that efficiently speeds up large language model (LLM) inference.

The AI semiconductor developed by the research team, the ‘LPU (Latency Processing Unit)’, efficiently accelerates LLM inference. It has a compute engine that maximizes the use of memory bandwidth and performs all the calculations needed for inference at high speed, and its built-in networking makes it easy to scale to multiple accelerators.

The LPU-based accelerator server is said to deliver up to 50% higher performance and 2.4 times better price/performance than a supercomputer based on Nvidia’s ‘A100’ high-performance GPU. KAIST emphasized, “We expect it to be able to replace high-performance GPUs in data centers where the demand for generative AI services is increasing rapidly.”

Overview of the LPU structure developed by the research team (Photo = KAIST)

This research was carried out by HyperAccel, a company founded by Professor Joo-Young Kim, and won the Best Presentation Award in the engineering category at the ‘International Semiconductor Design Automation Conference (DAC)’, held in San Francisco, USA, on the 12th of last month.

DAC is a leading international conference in semiconductor design that showcases state-of-the-art design technology, particularly in electronic design automation (EDA) and semiconductor intellectual property (IP). World-renowned semiconductor design companies such as Intel, Nvidia, AMD, Google, Microsoft, Samsung, and TSMC take part in DAC, as do universities including Harvard University, MIT, and Stanford University.

Ph.D. candidate Seungjae Moon receiving the award at DAC (Photo = KAIST)

KAIST emphasized that it was very meaningful that Professor Kim’s team was the only one to receive an award for AI semiconductor technology for LLMs. With this award, the LPU has been recognized on the world stage as an AI semiconductor solution that can greatly reduce the cost of LLM inference.

Professor Joo-Young Kim expressed his ambition, saying, “We will pioneer the global market with the new ‘LPU’ processor for massive artificial intelligence calculations in the future and get ahead of the technological power of big tech companies.”

