(Image = Nvidia)

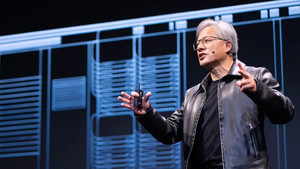

Major technology companies such as AWS, Google, Microsoft (MS), Oracle, and IBM are accelerating their collaboration with NVIDIA to deliver artificial intelligence (AI) computing infrastructure, software, and services, according to announcements made at GTC.

VentureBeat summarized the partnerships between NVIDIA and major technology companies announced at GTC on the 20th (local time).

■ AWS

NVIDIA said AWS will offer the new Grace Blackwell platform, with 72 Blackwell GPUs and 36 Grace CPUs, on EC2 instances. This will let customers build and run real-time inference on trillion-parameter large language models (LLMs) faster, at greater scale, and at lower cost than with previous-generation GPUs.

The two companies also announced that 20,736 GB200 Superchips will be deployed in 'Project Ceiba', an AI supercomputer built exclusively on AWS, and that Amazon SageMaker will be integrated with NVIDIA NIM inference microservices.

■ Google Cloud

Like Amazon, Google announced that it will bring NVIDIA's Grace Blackwell platform and NIM microservices to its cloud infrastructure. Google is also adding support on NVIDIA H100 GPUs for JAX, a Python framework for high-performance LLM training, and will make the NVIDIA NeMo framework easier to deploy across its platform through Google Kubernetes Engine (GKE) and the Google Cloud HPC Toolkit.

Vertex AI now supports Google Cloud A3 VMs running on NVIDIA H100 GPUs and G2 VMs running on NVIDIA L4 Tensor Core GPUs.

(Image = Nvidia)

■ MS

Microsoft plans to bring the Grace Blackwell Superchip and NIM microservices to the Azure cloud. The Superchip partnership also includes NVIDIA's new Quantum-X800 InfiniBand networking platform. Microsoft also announced the availability of the newly launched Omniverse Cloud APIs on the Azure Power Platform, and said native integration between DGX Cloud and Microsoft Fabric will simplify the development of custom AI models.

In healthcare, Microsoft will also bring NVIDIA's Clara microservices suite and DGX Cloud to Azure to help healthcare providers, pharmaceutical and biotechnology companies, and medical device developers innovate rapidly across clinical research and care delivery, the company said.

■ Oracle

Oracle announced that it plans to adopt the Grace Blackwell computing platform across OCI Supercluster and OCI Compute, with OCI Compute instances adopting the NVIDIA GB200 Superchip and the B200 Tensor Core GPU. The platform will also be available through NVIDIA DGX Cloud on OCI.

OCI also plans to offer NVIDIA NIM and CUDA-X microservices, including NeMo Retriever for retrieval-augmented generation (RAG), to help OCI customers build generative AI applications.

■ SAP

SAP is working with NVIDIA to integrate generative AI into its cloud solutions, including the latest versions of SAP Datasphere, SAP Business Technology Platform (BTP), and RISE with SAP.

The company also said it plans to build additional generative AI capabilities within SAP BTP using NVIDIA's generative AI foundry service, which includes DGX Cloud AI supercomputing, NVIDIA AI Enterprise software, and NVIDIA AI Foundation models.

(Image = Nvidia)

■ Siemens

Siemens plans to expand its partnership with NVIDIA by bringing the new NVIDIA Omniverse Cloud APIs to applications on its Siemens Xcelerator platform, including Teamcenter X, its cloud-based product lifecycle management (PLM) software.

By connecting NVIDIA Omniverse and Teamcenter X through the Omniverse Cloud APIs, tasks such as applying materials, lighting environments, and other supporting background assets in physically based rendering can be accelerated with generative AI.

Siemens also plans to bring NVIDIA accelerated computing, generative AI, and Omniverse integration to more of the Siemens Xcelerator portfolio.

■ IBM

To help clients solve their business challenges, IBM Consulting plans to combine its technology and industry expertise with NVIDIA's enterprise AI software stack, including the new NIM microservices and Omniverse technologies. IBM said this will accelerate customers' AI workflows, improve optimization for specific use cases, and help develop business- and industry-specific AI use cases.

IBM already uses Isaac Sim and Omniverse to build and deliver digital twin applications for supply chain and manufacturing.

■ Snowflake

Cloud data company Snowflake has expanded its previously announced partnership with NVIDIA to integrate NeMo Retriever, a generative AI microservice that connects custom large language models (LLMs) to enterprise data. It will help customers improve the performance and scalability of chatbot applications built with Snowflake Cortex.

The collaboration also includes NVIDIA TensorRT software, which provides low latency and high throughput for deep learning inference applications.

In addition to Snowflake, data platform providers Box, Dataloop, Cloudera, Cohesity, DataStax, and NetApp announced plans to use NVIDIA microservices, including the all-new NIM technology, to help customers optimize retrieval-augmented generation (RAG) pipelines and integrate their proprietary data into generative AI applications.

■ Johnson & Johnson MedTech

Johnson & Johnson MedTech is collaborating with NVIDIA to test new AI capabilities for a connected digital ecosystem for surgery. The effort aims to support healthcare professionals before, during, and after surgery by enabling open innovation and accelerating the delivery of insights at scale and in real time.

AI is already being used to connect and analyze operating room data and to make predictions from it. AI is also expected to play an important role in the future of surgery by improving operating room efficiency and clinical decision-making.

Johnson & Johnson MedTech supports 80% of the world’s operating rooms and trains more than 140,000 medical professionals each year through educational programs.

Combining Johnson & Johnson MedTech's long-standing technology and digital surgery ecosystem with NVIDIA's leading AI solutions can accelerate the infrastructure needed to deploy AI-based software applications for surgery. This includes the NVIDIA IGX edge computing platform and the NVIDIA Holoscan edge AI platform, which allow data from devices in the operating room to be processed securely and in real time, providing clinical insights and improving surgical outcomes.

This technology can also facilitate the use of third-party models and applications developed across the digital surgery ecosystem by providing a common AI computing platform.

(Image = Nvidia)

■ Apple

NVIDIA Omniverse Enterprise digital twins based on OpenUSD are coming to Apple Vision Pro. A new software framework built on the Omniverse Cloud APIs lets developers easily send OpenUSD industrial scenes from their content-creation applications to the NVIDIA Graphics Delivery Network (GDN).

GDN is a global network of graphics-capable data centers that can stream advanced 3D experiences to Apple Vision Pro.

In a demo unveiled at GTC, NVIDIA showed off a physically accurate, interactive digital twin of a car streamed in full fidelity to Apple’s Vision Pro high-resolution display.

■ TSMC and Synopsys

NVIDIA announced that TSMC and Synopsys are adopting its computational lithography platform. With this, TSMC and Synopsys have laid the groundwork to accelerate the manufacturing of next-generation, cutting-edge semiconductor chips and to overcome the limits of current manufacturing technology.

Foundry TSMC and chip design software company Synopsys have integrated NVIDIA cuLitho into their software, manufacturing processes, and systems to accelerate semiconductor chip manufacturing, and plan to support next-generation NVIDIA Blackwell architecture GPUs in the future.

NVIDIA also introduced a new generative AI algorithm that enhances cuLitho, its library for GPU-accelerated computational lithography. NVIDIA said this dramatically improves the semiconductor manufacturing process compared with current CPU-based methods.

■ Bank of New York Mellon

Bank of New York Mellon announced that it is the first global bank to build a DGX SuperPOD with DGX H100 systems. The system comprises dozens of DGX systems connected by InfiniBand networking and is based on the DGX SuperPOD reference architecture. Bank of New York Mellon said this gives it computing performance that was not previously possible.

On the new system, Bank of New York Mellon plans to use NVIDIA AI Enterprise software to build and deploy AI applications and to manage its AI infrastructure.

Reporter Park Chan cpark@aitimes.com
