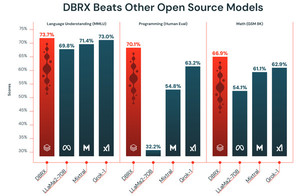

DBRX outperforms other open source LLMs on key benchmarks for language comprehension (MMLU), programming ability (HumanEval) and math performance (GSM8K). (Image = Databricks)

Databricks has released a new open source large language model (LLM) that it says offers the highest performance of any open model available today. The aim is to help companies build custom artificial intelligence (AI) with performance that surpasses all other open source models, including Meta's 'Llama 2'.

TechCrunch reported on the 27th (local time) that Databricks had launched 'DBRX', an open source LLM designed for building customized models for companies.

According to the report, the model comes in two versions: 'DBRX Base', a base model that users can run and fine-tune on their own or proprietary data, and 'DBRX Instruct', a fine-tuned version. Both can be downloaded from GitHub and Hugging Face for research and commercial use.

Databricks is also leveraging its position as a data specialist: customers can work with DBRX on the Databricks platform and build custom DBRX models with their own data. The model is also available through Databricks on AWS, Google Cloud and Microsoft Azure (Azure Databricks).

DBRX has 132 billion parameters and uses a 'Mixture of Experts (MoE)' architecture: it contains 16 smaller specialized "expert" models and, for each input, dynamically selects the 4 most relevant ones. As a result, only 36 billion of its 132 billion parameters are active at any given time, making the model faster and cheaper to run.
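To make the routing idea concrete, here is a toy sketch of top-k expert gating, assuming a simple softmax router over 16 experts with 4 selected per input. It is not DBRX's actual implementation: the expert functions, router logits, dimensions and helper names (`route`, `moe_layer`, `make_expert`) are hypothetical illustrations.

```python
import math
import random

NUM_EXPERTS = 16  # total experts in DBRX (from the article)
TOP_K = 4         # experts selected per input (from the article)


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def route(router_logits, k=TOP_K):
    """Pick the k highest-scoring experts and renormalize their scores into weights."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    return top, weights


def make_expert(seed, dim):
    """Hypothetical toy expert: a fixed random linear map (real experts are feed-forward networks)."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w[r][c] * x[c] for c in range(dim)) for r in range(dim)]

    return expert


def moe_layer(x, router_logits, experts, k=TOP_K):
    """Run only the top-k experts and mix their outputs by router weight."""
    idx, weights = route(router_logits, k)
    out = [0.0] * len(x)
    for i, w in zip(idx, weights):
        y = experts[i](x)  # only the selected experts do any work
        out = [o + w * v for o, v in zip(out, y)]
    return out, idx


if __name__ == "__main__":
    DIM = 8
    experts = [make_expert(s, DIM) for s in range(NUM_EXPERTS)]
    rng = random.Random(0)
    x = [rng.uniform(-1, 1) for _ in range(DIM)]
    logits = [rng.gauss(0, 1) for _ in range(NUM_EXPERTS)]  # stand-in for a learned router
    y, used = moe_layer(x, logits, experts)
    print(f"experts used: {sorted(used)} ({len(used)} of {NUM_EXPERTS})")
```

Only four of the sixteen expert functions are called per input, which is where the compute savings come from. Note that 4 of 16 experts is 25%, while 36 of 132 billion parameters is about 27%; the gap is presumably because some components, such as attention layers and embeddings, are shared and run for every input regardless of routing.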

Databricks said DBRX outperformed existing open source models such as Meta's 'Llama 2 70B' and Mistral AI's 'Mixtral 8x7B' on standard industry benchmarks covering language understanding, programming, mathematics and logic. The company added that DBRX also outperforms OpenAI's 'GPT-3.5', although it falls short of 'GPT-4'.

DBRX outperforms OpenAI's GPT-3.5 on key benchmarks for language understanding (MMLU), programming ability (HumanEval) and math performance (GSM8K). (Image = Databricks)

"We are excited to share DBRX with the world and lead the industry towards more powerful and efficient open source AI," said Ali Ghodsi, CEO of Databricks. "The goal is to build a model that is tailored to each company and truly understands it."

He also said that training DBRX took about two months and cost $10 million (about 13 billion won).

The catch, however, is that DBRX is very difficult to use for anyone who is not a Databricks customer.

That is because running DBRX requires a server or PC with at least four NVIDIA 'H100' GPUs, and a single H100 costs tens of thousands of dollars.
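A back-of-the-envelope calculation suggests why four GPUs is the floor. This sketch assumes the weights are stored in 16-bit precision (2 bytes per parameter) with no quantization, and uses the 80 GB memory of a standard H100; it ignores the extra memory needed for activations and the KV cache, so real deployments need some headroom beyond this estimate. The helper names are hypothetical.

```python
import math

TOTAL_PARAMS = 132e9   # total DBRX parameters (from the article)
ACTIVE_PARAMS = 36e9   # parameters active per input (from the article)
BYTES_PER_PARAM = 2    # assumption: 16-bit (bf16/fp16) weights, no quantization
H100_MEMORY_GB = 80    # assumption: standard 80 GB H100


def weights_gb(params, bytes_per_param=BYTES_PER_PARAM):
    """Memory in GB needed just to hold the weights."""
    return params * bytes_per_param / 1e9


def gpus_needed(params, gpu_gb=H100_MEMORY_GB, bytes_per_param=BYTES_PER_PARAM):
    """Minimum number of GPUs whose combined memory fits the weights (ignores activations and KV cache)."""
    return math.ceil(weights_gb(params, bytes_per_param) / gpu_gb)


print(f"All weights:      {weights_gb(TOTAL_PARAMS):.0f} GB -> {gpus_needed(TOTAL_PARAMS)} x H100")
print(f"Active per input: {weights_gb(ACTIVE_PARAMS):.0f} GB")
```

Because every expert must stay in memory even though only four are used per input, the MoE design cuts compute per input but not the memory needed to hold the model: the full 132 billion parameters come to about 264 GB, which exceeds three 80 GB cards (240 GB) but fits in four (320 GB).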

While companies can build their own infrastructure to use DBRX, this is difficult for many developers and individual business owners. It is possible to run the model on another cloud, but this is expensive due to the high hardware requirements.

In addition, Databricks offers its customers its 'Mosaic AI' tools alongside DBRX, letting them quickly build and deploy custom models by fine-tuning DBRX on their own data.

For this reason, some analysts believe DBRX was released as open source to encourage corporate customers to use Databricks' data resources to build their own AI models.

Reporter Park Chan cpark@aitimes.com
